Professor David Thomson

03 Nov, 2022 | 4 minutes

It was with great interest that we recently read an article in The Guardian (UK) entitled “Information Commissioner warns firms over ‘emotional analysis’ technologies”, as many of the views expressed in the piece echoed our own experiences (both first-hand and anecdotal) with methods that purport to ‘measure emotion’.

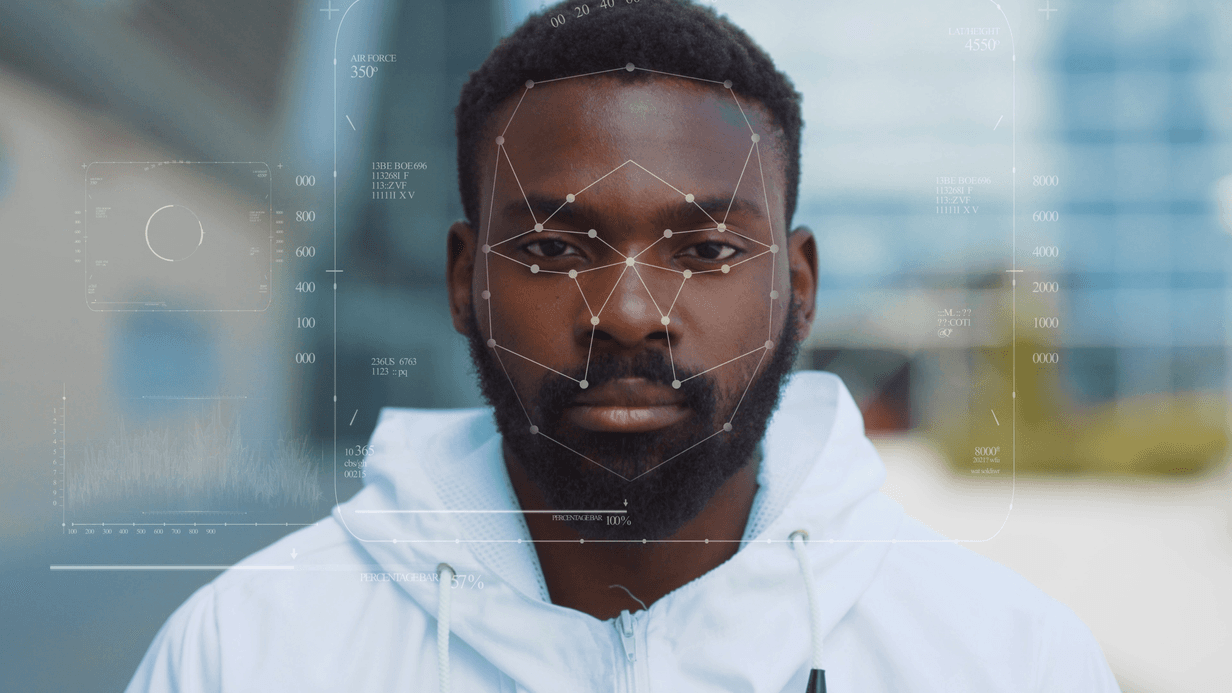

Whilst we need no convincing about the importance of emotion in delivering motivating brand and product experiences, we’ve long doubted the validity of using facial coding, other biometric methods and neuroscience tools as a means of ‘measuring’ emotion. This is because all of these methods rest on the premise that there’s a direct causal relationship between our emotions and our physiology and that, consequently, the latter can be used to predict the former. Increasingly, this is being recognized as a false premise. This being so, it’s hardly surprising that when these methods are incorporated into consumer and product research, they usually provide very dubious results that are difficult to interpret and act upon. Added to this, it’s practically impossible to use these methods in the sort of ‘natural’ setting that’s essential in our heartland areas of product and pack development.

That said, we know that our clients often think of understanding ‘emotion’ as the holy grail in their ongoing quest to create propositions that will truly engage consumers and ultimately drive long-term sales.

Whilst we’ve always been keen to share our reservations about these biometric and neuroscience techniques with enquiring clients, the dire warning issued recently by the UK Government’s Information Commissioner’s Office (ICO) that these ‘emotional analysis’ techniques are based on a ‘false premise’ and should not be relied on for anything more than a bit of ‘fun’ has definitely raised the stakes. This, coupled with powerful new developments in the research methodologies of our Chairman, Professor David Thomson, means it’s time for us to take a stand and shout from the rooftops:

“You can’t predict emotions from facial expressions…or any other physiological measures!”

You can read the Guardian article here and the more detailed ICO report here. You can also listen here to the hard-hitting interview with Deputy Information Commissioner Stephen Bonner, which was first broadcast by the BBC’s World Service on 31 October 2022. It’s brutal and should leave nobody in any doubt that the ICO considers this approach to emotion measurement to be nothing more than ‘junk science’. We agree!

For those who may require further justification of the stance taken by the UK’s ICO, we recommend reading this thought piece published recently in Scientific American by neuroscientist Professor Lisa Feldman Barrett of Northeastern University, Boston, or listening to her 2018 TED talk. With over 25 years’ experience in analyzing facial maps, brain scans and other neurophysiological studies, Professor Barrett concludes:

“Emotion AI systems, therefore, do not detect emotions. They detect physical signals, such as facial muscle movements, not the psychological meaning of those signals.”

Like the UK’s ICO, she doesn’t mince her words when it comes to those who market and sell this type of ‘junk science’:

“It is time for emotion AI proponents and the companies that make and market these products to cut the hype and acknowledge that facial muscle movements do not map universally to specific emotions. The evidence is clear that the same emotion can accompany different facial movements and that the same facial movements can have different (or no) emotional meaning.”

In spite of these hard-hitting comments, this is not an easy message to get across, particularly when going up against the full force of VC/PE-funded marketing campaigns from the various tech companies pushing their facial coding and other biometric solutions, some of the more traditional agencies that have jumped onto the ‘tech bandwagon’, and even some CPG companies that have invested heavily in this space.

We’d be delighted to discuss with you the alternatives we’ve been working on: a philosophy and practical solutions that are scientifically grounded (published in credible, peer-reviewed scientific journals) and that allow us to access a deeper understanding of consumer purchasing behavior.

In the meantime, we’ll leave you with this quotation from Stephen Bonner, Deputy Commissioner of the ICO:

“I think we’d all love it if there were technologies that could solve some of these big difficult questions, but all the scientists we talk to tell us this doesn’t work. And it’s not that it’s a difficult technology that’s going to be cracked one day, it’s that there’s no meaningful link between our inner emotional state and the expression of that on our faces. So technologies that claim to be able to infer or understand our emotions are fundamentally flawed.”